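Integration testing of the stress-testing platform in the offline environment went smoothly, because the platform and the JMeter master and slave nodes were all deployed in the same environment, so there were no network issues at all. Today, however, I was setting up the release environment, and it really did not go the way I expected.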

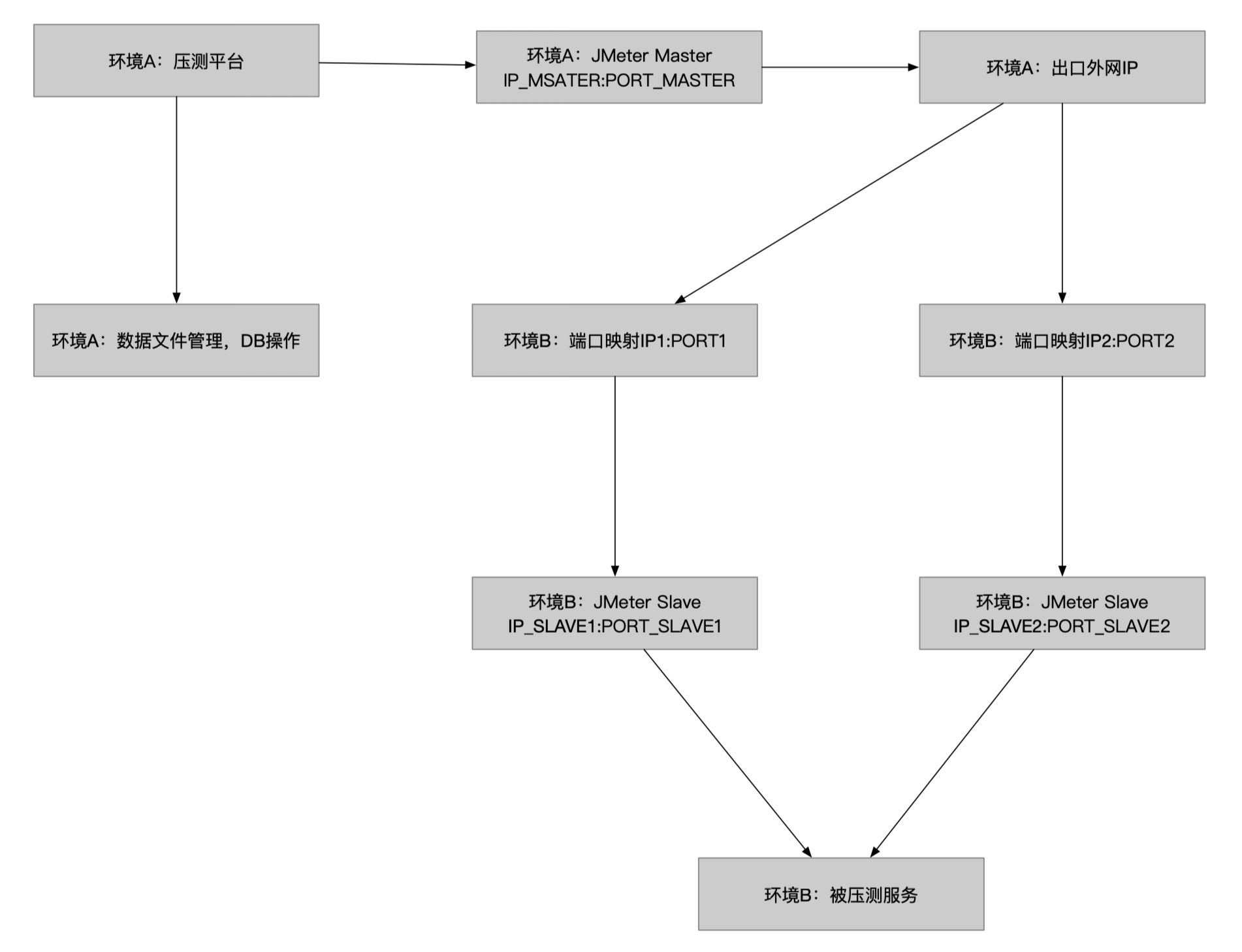

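The requirement: some of the platform's data is stored in environment A, while environment B is a dedicated stress-testing environment where the services under test are deployed. At first I had not expected the services under test and the load generators to sit in different environments. During development I reasoned that the master node only does control and scheduling and carries modest load, so I planned to put the stress-testing platform and the master on the same node. That left only one path that needed connectivity: master to slaves.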

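In a single environment the master simply talks to every slave directly, with no port mapping in between. Here, though, environments A and B cannot reach each other, so unless the two networks can be connected directly through routing, port mapping is the only option.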
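Assuming the mapping is a plain TCP-level forward (the post does not say whether the ops teams used NAT rules or a relay process), its job is to take connections arriving at a public address and port and relay them to a slave's address and port. The minimal sketch below is a hypothetical illustration, not the tool that was actually configured; `PortForwarder` and its methods are made-up names. It highlights one property: the forwarder copies bytes without inspecting them, so any address an application writes into its own messages passes through unchanged.

```java
import java.io.IOException;
import java.net.ServerSocket;
import java.net.Socket;

/**
 * Hypothetical, minimal TCP port forwarder: each connection accepted on the
 * listening port is relayed byte for byte to targetHost:targetPort.
 * Requires Java 9+ for InputStream.transferTo.
 */
public class PortForwarder implements AutoCloseable {
    private final ServerSocket server;
    private final String targetHost;
    private final int targetPort;

    public PortForwarder(int listenPort, String targetHost, int targetPort) throws IOException {
        this.server = new ServerSocket(listenPort); // 0 = let the OS choose a free port
        this.targetHost = targetHost;
        this.targetPort = targetPort;
        Thread acceptor = new Thread(this::acceptLoop, "forwarder-accept");
        acceptor.setDaemon(true);
        acceptor.start();
    }

    public int localPort() {
        return server.getLocalPort();
    }

    private void acceptLoop() {
        while (true) {
            Socket client;
            try {
                client = server.accept();
            } catch (IOException e) {
                return; // server socket closed: stop accepting
            }
            try {
                Socket upstream = new Socket(targetHost, targetPort);
                pipe(client, upstream); // client -> target
                pipe(upstream, client); // target -> client
            } catch (IOException e) {
                closeQuietly(client); // target unreachable: drop this client
            }
        }
    }

    /** Copies bytes one way; when either direction ends, both sockets are closed. */
    private static void pipe(Socket from, Socket to) {
        Thread t = new Thread(() -> {
            try {
                // Payload is copied untouched: addresses written inside it are never rewritten.
                from.getInputStream().transferTo(to.getOutputStream());
            } catch (IOException ignored) {
                // connection reset, or already closed by the opposite pipe
            } finally {
                closeQuietly(from);
                closeQuietly(to);
            }
        }, "forwarder-pipe");
        t.setDaemon(true);
        t.start();
    }

    private static void closeQuietly(Socket s) {
        try {
            s.close();
        } catch (IOException ignored) {
            // nothing useful to do here
        }
    }

    @Override
    public void close() throws IOException {
        server.close();
    }
}
```

Tearing down both sockets as soon as either direction ends is a simplification (half-closed connections are not supported); it is enough to illustrate the idea but is not a production relay.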

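In fact, had I deployed the platform service in A and put the master's JMeter installation in environment B alongside the slaves, there would be no cross-environment master/slave traffic to worry about; the platform service would only need to invoke the stress-test command on the master remotely. Of course, that is hindsight.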
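For the alternative layout just described, the platform only has to ask the master to run one non-GUI command. A minimal sketch, assuming some agent process on the master receives the request (the transport, such as HTTP or SSH, is left out). `MasterCommand` and `distributedRun` are hypothetical names, and only the JMeter flags already used in this post appear: `-n`, `-t`, `-R` and `-l`.

```java
import java.util.ArrayList;
import java.util.List;

public class MasterCommand {

    /**
     * Hypothetical helper: builds the non-GUI distributed JMeter command line
     * that an agent on the master would run on the platform's behalf.
     */
    static List<String> distributedRun(String jmeter, String jmx, List<String> remotes, String jtl) {
        if (remotes.isEmpty()) {
            throw new IllegalArgumentException("at least one remote engine is required");
        }
        List<String> cmd = new ArrayList<>();
        cmd.add(jmeter);
        cmd.add("-n");                      // non-GUI mode
        cmd.add("-t");
        cmd.add(jmx);                       // test plan
        cmd.add("-R");
        cmd.add(String.join(",", remotes)); // remote engines as comma-separated host:port
        cmd.add("-l");
        cmd.add(jtl);                       // results file
        return cmd;
    }

    public static void main(String[] args) {
        // With the master inside environment B, -R can list the slaves' own
        // addresses; "slave-1" and "slave-2" are placeholder hostnames.
        List<String> cmd = distributedRun("./jmeter", "baidu.jmx",
                List.of("slave-1:1099", "slave-2:1099"), "my.jtl");
        System.out.println(String.join(" ", cmd));
        // An agent could then start it with:
        // new ProcessBuilder(cmd).inheritIO().start().waitFor();
    }
}
```

Returning the argument list instead of a single shell string keeps the command free of quoting problems when it is handed to `ProcessBuilder`.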

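Back to the point: the goal here is to describe the problem and pin down its cause. The figure above roughly shows how the isolated networks are bridged through port mapping, so I won't go into it further.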

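Fortunately I had worked on networking before. After the ops teams of each environment opened the paths as I requested, I captured packets to check TCP connection setup, payloads and so on, and confirmed that everything was correct.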

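The problem is this: although I had confirmed the network connectivity, during a distributed test the master, pointed at the mapped address IP1:PORT1, could not reach the slave. The error was odd, too: it said it could not connect to IP_SLAVE1:PORT_SLAVE1. That is strange, because the master was never able to reach the slave directly, which is exactly why the port mapping exists: packets sent to IP1:PORT1 are supposed to be forwarded to IP_SLAVE1:PORT_SLAVE1 automatically. Besides, I never specified IP_SLAVE1:PORT_SLAVE1 when starting the master, so where did this error come from? Was it somehow discovering which servers were running?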

Prose is hard to follow, so here is a concrete example. The port mapping is 11.22.33.44:55555 => 1.2.3.4:1099.

Slave node:

```
$ ./jmeter-server -Djava.rmi.server.hostname=1.2.3.4
SLF4J: Class path contains multiple SLF4J bindings.
SLF4J: Found binding in [jar:file:/home/docker/logs/nps/tuya-jmeter/lib/log4j-slf4j-impl-2.13.3.jar!/org/slf4j/impl/StaticLoggerBinder.class]
SLF4J: Found binding in [jar:file:/home/docker/logs/nps/tuya-jmeter/lib/ext/jmeter-plugins-dubbo-2.7.8-jar-with-dependencies.jar!/org/slf4j/impl/StaticLoggerBinder.class]
SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.
SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]
Using local port: 1099
Created remote object: UnicastServerRef2 [liveRef: [endpoint:[1.2.3.4:1099](local),objID:[-1a3fbb9b:1771fc07d74:-7fff, 134469626239589308]]]
```

Master node:

```
$ ./jmeter -n -t baidu.jmx -R 11.22.33.44:55555 -l my.jtl
SLF4J: Class path contains multiple SLF4J bindings.
SLF4J: Found binding in [jar:file:/mnt/logs/apps/nbest-perf-service/nps/tuya-jmeter/lib/log4j-slf4j-impl-2.13.3.jar!/org/slf4j/impl/StaticLoggerBinder.class]
SLF4J: Found binding in [jar:file:/mnt/logs/apps/nbest-perf-service/nps/tuya-jmeter/lib/ext/jmeter-plugins-dubbo-2.7.8-jar-with-dependencies.jar!/org/slf4j/impl/StaticLoggerBinder.class]
SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.
SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]
Creating summariser
Created the tree successfully using baidu.jmx
Configuring remote engine: 11.22.33.44:55555
Starting distributed test with remote engines: [11.22.33.44:55555] @ Wed Jan 20 20:56:37 CST 2021 (1611147397067)
Error in rconfigure() method java.rmi.ConnectException: Connection refused to host: 1.2.3.4; nested exception is:
	java.net.ConnectException: Connection refused (Connection refused)
Remote engines have been started:[]
The following remote engines have not started:[11.22.33.44:55555]
Waiting for possible Shutdown/StopTestNow/HeapDump/ThreadDump message on port 4445
```

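The strangest part is the Error line: `Connection refused to host: 1.2.3.4`. I never specified 1.2.3.4 anywhere on the master. The only possible source is that the master detected the slave's running jmeter-server process, which had been started with remote IP 1.2.3.4. Since that IP cannot be reached directly, the master learning it at all means the `-R 11.22.33.44:55555` mapping did take effect. But if the mapping works, traffic should simply be forwarded to 1.2.3.4:1099, so why the connection failure? I tried all sorts of guesses and found no answer online, so the only option left was to read the JMeter source around this error. This was also my first time reading its source code.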

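First, the error comes from the `rconfigure()` method: an RMI call failed. My understanding is that the master makes a remote call to the slave to exchange data, and that call cannot get through. Let's set that aside for now; conveniently, a few lines below, the list of started engines is empty: `Remote engines have been started:[]`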

```java
/**
 * Starts a remote testing engines
 *
 * @param addresses list of the DNS names or IP addresses of the remote testing engines
 */
@SuppressWarnings("JdkObsolete")
public void start(List<String> addresses) {
    long now = System.currentTimeMillis();
    println("Starting distributed test with remote engines: " + addresses + " @ " + new Date(now) + " (" + now + ")");
    List<String> startedEngines = new ArrayList<>(addresses.size());
    List<String> failedEngines = new ArrayList<>(addresses.size());
    for (String address : addresses) {
        JMeterEngine engine = engines.get(address);
        try {
            if (engine != null) {
                engine.runTest();
                startedEngines.add(address);
            } else {
                log.warn(HOST_NOT_FOUND_MESSAGE, address);
                failedEngines.add(address);
            }
        } catch (IllegalStateException | JMeterEngineException e) { // NOSONAR already reported to user
            failedEngines.add(address);
            JMeterUtils.reportErrorToUser(e.getMessage(), JMeterUtils.getResString("remote_error_starting")); // $NON-NLS-1$
        }
    }
    println("Remote engines have been started:" + startedEngines);
    if (!failedEngines.isEmpty()) {
        errln("The following remote engines have not started:" + failedEngines);
    }
}
```

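Continuing: `startedEngines` is empty, so either `engine.runTest()` threw or `engine` was null and `runTest()` was never reached. Let's check the null case first; `engines` is a map: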

```java
private final Map<String, JMeterEngine> engines = new HashMap<>();
```

Let's see where it gets populated:

```java
public void init(List<String> addresses, HashTree tree) {
    // converting list into mutable version
    List<String> addrs = new ArrayList<>(addresses);
    for (int tryNo = 0; tryNo < retriesNumber; tryNo++) {
        if (tryNo > 0) {
            println("Following remote engines will retry configuring: " + addrs+", pausing before retry for " + retriesDelay + "ms");
            try {
                Thread.sleep(retriesDelay);
            } catch (InterruptedException e) { // NOSONAR
                throw new IllegalStateException("Interrupted while initializing remote engines:"+addrs, e);
            }
        }
        int idx = 0;
        while (idx < addrs.size()) {
            String address = addrs.get(idx);
            println("Configuring remote engine: " + address);
            JMeterEngine engine = getClientEngine(address.trim(), tree);
            if (engine != null) {
                engines.put(address, engine);
                addrs.remove(address);
            } else {
                println("Failed to configure " + address);
                idx++;
            }
        }
        if (addrs.isEmpty()) {
            break;
        }
    }
    if (!addrs.isEmpty()) {
        String msg = "Following remote engines could not be configured:" + addrs;
        if (!continueOnFail || engines.isEmpty()) {
            stop();
            throw new RuntimeException(msg); // NOSONAR
        } else {
            println(msg);
            println("Continuing without failed engines...");
        }
    }
}
```

In the error output, the following line printed `address`, which is exactly the IP and port we configured:

```java
println("Configuring remote engine: " + address);
```

And the line below is the one that reports that this IP and port could not be configured:

```java
String msg = "Following remote engines could not be configured:" + addrs;
```

This means `addrs` is not empty, so `engines` is empty here, which means `engine` was null. The next thing to look at is `getClientEngine`:

```java
private JMeterEngine getClientEngine(String address, HashTree testTree) {
    JMeterEngine engine;
    try {
        engine = createEngine(address);
        engine.configure(testTree);
        if (!remoteProps.isEmpty()) {
            engine.setProperties(remoteProps);
        }
        return engine;
    } catch (Exception ex) {
        log.error("Failed to create engine at {}", address, ex);
        JMeterUtils.reportErrorToUser(ex.getMessage(),
                JMeterUtils.getResString("remote_error_init") + ": " + address); // $NON-NLS-1$ $NON-NLS-2$
        return null;
    }
}
```

Since it returns null, an exception must have been thrown, so let's continue into `createEngine`:

```java
/**
 * A factory method that might be overridden for unit testing
 *
 * @param address address for engine
 * @return engine instance
 * @throws RemoteException if registry can't be contacted
 * @throws NotBoundException when name for address can't be found
 */
protected JMeterEngine createEngine(String address) throws RemoteException, NotBoundException {
    return new ClientJMeterEngine(address);
}
```

Next, the constructor:

```java
public ClientJMeterEngine(String hostAndPort) throws NotBoundException, RemoteException {
    this.remote = getEngine(hostAndPort);
    this.hostAndPort = hostAndPort;
}
```

We already know `hostAndPort` has a value, so on to `getEngine`:

```java
private static RemoteJMeterEngine getEngine(String hostAndPort)
        throws RemoteException, NotBoundException {
    final String name = RemoteJMeterEngineImpl.JMETER_ENGINE_RMI_NAME; // $NON-NLS-1$ $NON-NLS-2$
    String host = hostAndPort;
    int port = RmiUtils.DEFAULT_RMI_PORT;
    int indexOfSeparator = hostAndPort.indexOf(':');
    if (indexOfSeparator >= 0) {
        host = hostAndPort.substring(0, indexOfSeparator);
        String portAsString = hostAndPort.substring(indexOfSeparator+1);
        port = Integer.parseInt(portAsString);
    }
    Registry registry = LocateRegistry.getRegistry(
            host,
            port,
            RmiUtils.createClientSocketFactory());
    Remote remobj = registry.lookup(name);
    if (remobj instanceof RemoteJMeterEngine){
        final RemoteJMeterEngine rje = (RemoteJMeterEngine) remobj;
        if (remobj instanceof RemoteObject){
            RemoteObject robj = (RemoteObject) remobj;
            System.out.println("Using remote object: "+robj.getRef().remoteToString()); // NOSONAR
        }
        return rje;
    }
    throw new RemoteException("Could not find "+name);
}
```

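As you can see, this is a remote call, and it is ultimately how the master drives the slaves in a distributed test: `lookup` fetches the remote object that the slave bound in its RMI registry. So the problem could be that the object was not obtained, or that the lookup failed because of the network.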
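One detail of standard Java RMI is relevant here. The slave log shows `Created remote object: ... endpoint:[1.2.3.4:1099]`: when an object is exported, RMI writes the `java.rmi.server.hostname` value plus the object's port into its stub, and that stub is what `registry.lookup` hands to the client. Later calls on the stub dial that embedded endpoint directly instead of reusing the address that was used to reach the registry. This would explain how the master can go through `11.22.33.44:55555` and still end up with `Connection refused to host: 1.2.3.4`, reported from `rconfigure()`, a call made on the stub. The self-contained sketch below illustrates only this RMI behaviour and is not JMeter code; `StubEndpointDemo`, `Ping` and `advertisedRef` are hypothetical names, and the exact text of the printed reference is a JDK implementation detail.

```java
import java.io.UncheckedIOException;
import java.lang.reflect.Proxy;
import java.rmi.Remote;
import java.rmi.RemoteException;
import java.rmi.server.RemoteObject;
import java.rmi.server.UnicastRemoteObject;

public class StubEndpointDemo {

    // Hypothetical remote interface; every remote method must declare RemoteException.
    public interface Ping extends Remote {
        String ping() throws RemoteException;
    }

    static class PingImpl implements Ping {
        @Override
        public String ping() {
            return "pong";
        }
    }

    /**
     * Exports a remote object the way a server would and returns the
     * reference description carried by its stub. The host in that reference
     * comes from java.rmi.server.hostname, not from the address a client
     * later uses to reach the registry.
     */
    static String advertisedRef(String advertisedHost) {
        // Same effect as passing -Djava.rmi.server.hostname=... at startup.
        System.setProperty("java.rmi.server.hostname", advertisedHost);
        PingImpl impl = new PingImpl();
        try {
            // Port 0: the OS picks the port this object listens on.
            Remote stub = UnicastRemoteObject.exportObject(impl, 0);
            try {
                // Without pregenerated stub classes, the stub is a dynamic proxy
                // whose invocation handler is a RemoteObject holding the reference.
                RemoteObject holder = Proxy.isProxyClass(stub.getClass())
                        ? (RemoteObject) Proxy.getInvocationHandler(stub)
                        : (RemoteObject) stub;
                return holder.getRef().remoteToString();
            } finally {
                UnicastRemoteObject.unexportObject(impl, true);
            }
        } catch (RemoteException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        System.out.println(advertisedRef("1.2.3.4"));
    }
}
```

Running `main` should print a reference whose endpoint contains `1.2.3.4`, even though the machine running it does not own that address: the advertised host is simply copied into the stub.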

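So the problem really lies on the slave side. When starting jmeter-server I did specify `-Djava.rmi.server.hostname=`, but because the traffic goes through port mapping, the address the master actually uses is the relay IP and port, and that may leave some part of JMeter's distributed-testing mechanism not fully connected. I tried opening every mapped port and still got the same error, and with master and slave in the same network environment the problem does not occur, so it should still come down to this way of bridging the networks. I haven't dug into the actual root cause yet; I'll come back to it when I have time.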