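Last time, fine-tuning GLM-4 crawled along because I couldn't use the CUDA ecosystem. So this time I tried Apple's MLX framework directly on my local Mac for a fine-tuning test, and the experiment worked.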

Below is a simple fine-tuning test and the rough workflow.

1. Dataset

Since this is only a test, I jotted down a few simple Q&A pairs, just like the external knowledge base last time.

{"prompt": "明天什么天气", "completion": "大雪天"}

{"prompt": "1+1=?", "completion": "11"}

{"prompt": "为什么我的眼睛里总是总满了泪水", "completion": "干眼症"}

{"prompt": "打羽毛球消费高吗", "completion": "贵得要死"}

{"prompt": "今天心情如何", "completion": "心如死灰"}

2. Fine-tuning

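Since my local machine runs macOS, I used Apple's MLX framework directly for fine-tuning. You only need to point it at the original Hugging Face model and at the data directory.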

(mlx) $ mlx_lm.lora --model /Users/lihui/Work/models/huggingface/llm/Qwen/Qwen2-0.5B-Instruct --train --data ./data
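`mlx_lm.lora` reads `train.jsonl` and `valid.jsonl` from the directory passed to `--data` (a `test.jsonl` is optional), one JSON object per line, and `prompt`/`completion` records like the ones above are one of the formats it accepts. A small pre-flight check can catch a missing file or a malformed line before training starts. `check_data_dir` is a hypothetical helper of my own, not part of mlx_lm, and rejecting blank lines is a cautious assumption rather than a documented requirement.

```python
import json
from pathlib import Path

# Hypothetical pre-flight check (not part of mlx_lm): make sure the data
# directory holds the files mlx_lm.lora reads for training, and that every
# line is a JSON object with string "prompt" and "completion" fields.
REQUIRED_FILES = ("train.jsonl", "valid.jsonl")


def check_data_dir(data_dir: str) -> dict:
    """Return {file name: record count}; raise ValueError on bad input."""
    counts = {}
    for name in REQUIRED_FILES:
        path = Path(data_dir) / name
        if not path.is_file():
            raise ValueError(f"missing {path}")
        n = 0
        with path.open(encoding="utf-8") as f:
            for lineno, line in enumerate(f, start=1):
                if not line.strip():
                    # Be conservative: a strict one-object-per-line reader
                    # would fail on an empty line.
                    raise ValueError(f"{path}:{lineno}: blank line")
                record = json.loads(line)  # JSONDecodeError is a ValueError
                if not isinstance(record, dict):
                    raise ValueError(f"{path}:{lineno}: not a JSON object")
                for key in ("prompt", "completion"):
                    if not isinstance(record.get(key), str):
                        raise ValueError(f"{path}:{lineno}: missing string {key!r}")
                n += 1
        if n == 0:
            raise ValueError(f"{path} is empty")
        counts[name] = n
    return counts
```

Calling `check_data_dir("./data")` before launching `mlx_lm.lora` returns the record count per file, or raises with the offending file and line number.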

3. Merging

Once fine-tuning finishes, fuse the trained adapter into the original model to produce the final, complete model.
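Conceptually, fusing a LoRA adapter folds the low-rank update back into each adapted weight matrix, so the result is an ordinary dense model with no separate adapter. The sketch below shows that arithmetic with plain Python lists instead of MLX arrays. The layout (weight `W` as out×in, `A` as in×r, `B` as r×out, output `y = x Wᵀ + scale · (x A) B`) is my assumption about how mlx_lm stores adapters, and `scale` is a hypothetical value here; real adapters carry their own.

```python
# Illustrative LoRA fuse with plain lists (not mlx_lm's code).
# Assumed shapes: W (out, in), A (in, r), B (r, out).

def matmul(X, Y):
    """Naive matrix product of two lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*Y)] for row in X]


def transpose(X):
    return [list(col) for col in zip(*X)]


def fuse_lora(W, A, B, scale):
    """Return W + scale * (A B)^T: one dense (out, in) weight, adapter folded in."""
    delta = transpose(matmul(A, B))  # (in, out) -> (out, in)
    return [[w + scale * d for w, d in zip(w_row, d_row)]
            for w_row, d_row in zip(W, delta)]


def lora_forward(x, W, A, B, scale):
    """Adapter kept separate: base output plus the scaled low-rank correction."""
    base = matmul(x, transpose(W))
    low_rank = matmul(matmul(x, A), B)
    return [[b + scale * l for b, l in zip(b_row, l_row)]
            for b_row, l_row in zip(base, low_rank)]
```

For any input `x`, `matmul(x, transpose(fuse_lora(W, A, B, s)))` matches `lora_forward(x, W, A, B, s)` up to floating-point rounding, which is why the fused model needs no `--adapter-path` at generation time.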

(mlx) $ mlx_lm.fuse --model /Users/lihui/Work/models/huggingface/llm/Qwen/Qwen2-0.5B-Instruct --adapter-path adapters --save-path qwen2-0.5b-train

Loading pretrained model

Special tokens have been added in the vocabulary, make sure the associated word embeddings are fine-tuned or trained.

4. Verification

Run the final fused model to check whether the fine-tuning took effect.

(mlx) $ mlx_lm.generate --model qwen2-0.5b-train --prompt "明天天气如何"

With that test working, the next step is to find a larger dataset for domain-specific fine-tuning, such as medical Q&A.

1. Dataset

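I found an open-source Chinese medical dialogue dataset. It is somewhat dated, but it has close to 1 million entries. After a format conversion, it serves as the fine-tuning dataset.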
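A minimal sketch of such a conversion, assuming CSV source files. The column names `ask` and `answer`, the 5% validation split and the fixed shuffle seed are all assumptions for illustration, not taken from the dataset repository; adjust `prompt_col`, `completion_col` and `encoding` (for example `"gb18030"` if the files are not UTF-8) to match the actual files.

```python
import csv
import json
import random
from pathlib import Path


def convert(csv_paths, out_dir, prompt_col="ask", completion_col="answer",
            encoding="utf-8", valid_ratio=0.05, seed=0):
    """Turn question/answer CSV rows into mlx_lm-style prompt/completion JSONL.

    Column names, split ratio and seed are hypothetical defaults.
    Returns {file name: record count}.
    """
    records = []
    for p in csv_paths:
        with open(p, encoding=encoding, newline="") as f:
            for row in csv.DictReader(f):
                prompt = (row.get(prompt_col) or "").strip()
                completion = (row.get(completion_col) or "").strip()
                if prompt and completion:  # skip rows missing either side
                    records.append({"prompt": prompt, "completion": completion})

    # Shuffle deterministically, then carve off a small validation split.
    random.Random(seed).shuffle(records)
    n_valid = max(1, int(len(records) * valid_ratio))
    splits = {"valid.jsonl": records[:n_valid], "train.jsonl": records[n_valid:]}

    out = Path(out_dir)
    out.mkdir(parents=True, exist_ok=True)
    for name, rows in splits.items():
        with (out / name).open("w", encoding="utf-8") as f:
            for r in rows:
                # ensure_ascii=False keeps the Chinese text readable in the file;
                # json.dumps escapes embedded newlines, so each record stays on one line.
                f.write(json.dumps(r, ensure_ascii=False) + "\n")
    return {name: len(rows) for name, rows in splits.items()}
```

With close to a million rows, a 5% split still leaves tens of thousands of validation records; since mlx_lm only evaluates a limited number of validation batches per report, a smaller ratio would likely work just as well.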

GitHub: https://github.com/100ZZ/Chinese-medical-dialogue

2. Fine-tuning and merging

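The model is still Qwen2-0.5B-Instruct. Anything with more parameters is more than my machine can keep up with, although a larger model would certainly give better results.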
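The training log reports `Trainable parameters: 0.073% (0.360M/494.033M)`, which shows how little LoRA actually trains. A back-of-the-envelope count reproduces both numbers, assuming Qwen2-0.5B's published config (hidden size 896, 24 layers, 2 KV heads of dimension 64, MLP width 4864, vocabulary 151,936, tied embeddings) and what I take to be mlx_lm's LoRA defaults at the time: rank 8, adapters on `q_proj` and `v_proj` in the last 16 layers. Those defaults are an assumption, not something stated in the post.

```python
# Back-of-the-envelope LoRA parameter count for Qwen2-0.5B.
# Assumed model config (Qwen2-0.5B):
HIDDEN = 896
HEAD_DIM = 64
NUM_KV_HEADS = 2
NUM_LAYERS = 24
INTERMEDIATE = 4864
VOCAB = 151936
# Assumed mlx_lm LoRA defaults: rank 8 on q_proj and v_proj, last 16 layers.
RANK = 8
LORA_LAYERS = 16


def lora_params(in_dim, out_dim, rank):
    # Two low-rank factors: A is (in_dim, rank), B is (rank, out_dim).
    return rank * (in_dim + out_dim)


kv_dim = NUM_KV_HEADS * HEAD_DIM  # 128: grouped-query attention shrinks k/v
per_layer = lora_params(HIDDEN, HIDDEN, RANK) + lora_params(HIDDEN, kv_dim, RANK)
trainable = per_layer * LORA_LAYERS

# Base model: q/k/v projections carry biases, o_proj does not; SwiGLU MLP has
# three matrices; two RMSNorm weights per layer; tied embeddings; final norm.
attn = (HIDDEN * HIDDEN + HIDDEN) + 2 * (HIDDEN * kv_dim + kv_dim) + HIDDEN * HIDDEN
mlp = 3 * HIDDEN * INTERMEDIATE
norms = 2 * HIDDEN
total = NUM_LAYERS * (attn + mlp + norms) + VOCAB * HIDDEN + HIDDEN

print(f"trainable: {trainable / 1e6:.3f}M")
print(f"total:     {total / 1e6:.3f}M")
print(f"ratio:     {100 * trainable / total:.3f}%")
```

The result is 360,448 trainable parameters out of 494,032,768, matching the log, which suggests the run used those defaults. Raising the rank or the number of adapted layers scales the trainable count linearly.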

(mlx) $ mlx_lm.lora --model /Users/lihui/Work/models/huggingface/llm/Qwen/Qwen2-0.5B-Instruct --train --data ./data

Loading pretrained model

Special tokens have been added in the vocabulary, make sure the associated word embeddings are fine-tuned or trained.

Loading datasets

Training

Trainable parameters: 0.073% (0.360M/494.033M)

Starting training..., iters: 1000

Iter 1: Val loss 3.124, Val took 11.096s

Iter 10: Train loss 3.094, Learning Rate 1.000e-05, It/sec 1.141, Tokens/sec 869.235, Trained Tokens 7620, Peak mem 8.727 GB

Iter 20: Train loss 2.683, Learning Rate 1.000e-05, It/sec 2.210, Tokens/sec 1408.860, Trained Tokens 13995, Peak mem 8.727 GB

Iter 30: Train loss 2.618, Learning Rate 1.000e-05, It/sec 1.588, Tokens/sec 1355.005, Trained Tokens 22527, Peak mem 12.677 GB

Iter 40: Train loss 2.397, Learning Rate 1.000e-05, It/sec 2.000, Tokens/sec 1238.289, Trained Tokens 28717, Peak mem 12.677 GB

Iter 50: Train loss 2.403, Learning Rate 1.000e-05, It/sec 1.376, Tokens/sec 918.123, Trained Tokens 35391, Peak mem 12.677 GB

Iter 60: Train loss 2.295, Learning Rate 1.000e-05, It/sec 1.536, Tokens/sec 877.341, Trained Tokens 41101, Peak mem 12.677 GB

Iter 70: Train loss 2.483, Learning Rate 1.000e-05, It/sec 3.104, Tokens/sec 1897.341, Trained Tokens 47214, Peak mem 12.677 GB

Iter 80: Train loss 2.412, Learning Rate 1.000e-05, It/sec 2.519, Tokens/sec 1396.554, Trained Tokens 52758, Peak mem 12.677 GB

Iter 90: Train loss 2.028, Learning Rate 1.000e-05, It/sec 2.126, Tokens/sec 1390.519, Trained Tokens 59299, Peak mem 12.677 GB

Iter 100: Train loss 2.426, Learning Rate 1.000e-05, It/sec 2.609, Tokens/sec 1447.153, Trained Tokens 64846, Peak mem 12.677 GB

Iter 100: Saved adapter weights to adapters/adapters.safetensors and adapters/0000100_adapters.safetensors.

Iter 110: Train loss 2.366, Learning Rate 1.000e-05, It/sec 1.434, Tokens/sec 1048.349, Trained Tokens 72158, Peak mem 12.677 GB

Iter 120: Train loss 2.643, Learning Rate 1.000e-05, It/sec 1.390, Tokens/sec 954.298, Trained Tokens 79021, Peak mem 12.677 GB

Iter 130: Train loss 2.359, Learning Rate 1.000e-05, It/sec 1.176, Tokens/sec 1092.142, Trained Tokens 88304, Peak mem 16.624 GB

Iter 140: Train loss 2.352, Learning Rate 1.000e-05, It/sec 1.265, Tokens/sec 1043.898, Trained Tokens 96558, Peak mem 16.624 GB

Iter 150: Train loss 2.452, Learning Rate 1.000e-05, It/sec 1.485, Tokens/sec 988.449, Trained Tokens 103215, Peak mem 16.624 GB

Iter 160: Train loss 2.171, Learning Rate 1.000e-05, It/sec 1.320, Tokens/sec 1115.297, Trained Tokens 111666, Peak mem 16.624 GB

Iter 170: Train loss 2.242, Learning Rate 1.000e-05, It/sec 1.754, Tokens/sec 1056.211, Trained Tokens 117687, Peak mem 16.624 GB

Iter 180: Train loss 2.243, Learning Rate 1.000e-05, It/sec 1.043, Tokens/sec 639.487, Trained Tokens 123821, Peak mem 16.624 GB

Iter 190: Train loss 2.232, Learning Rate 1.000e-05, It/sec 2.063, Tokens/sec 1199.389, Trained Tokens 129635, Peak mem 16.624 GB

Iter 200: Val loss 2.352, Val took 17.364s

Iter 200: Train loss 2.556, Learning Rate 1.000e-05, It/sec 35.312, Tokens/sec 22546.856, Trained Tokens 136020, Peak mem 16.624 GB

Iter 200: Saved adapter weights to adapters/adapters.safetensors and adapters/0000200_adapters.safetensors.

Iter 210: Train loss 2.356, Learning Rate 1.000e-05, It/sec 1.762, Tokens/sec 1203.480, Trained Tokens 142852, Peak mem 16.624 GB

Iter 220: Train loss 2.325, Learning Rate 1.000e-05, It/sec 2.040, Tokens/sec 1395.629, Trained Tokens 149694, Peak mem 16.624 GB

Iter 230: Train loss 2.392, Learning Rate 1.000e-05, It/sec 1.984, Tokens/sec 1156.813, Trained Tokens 155524, Peak mem 16.624 GB

Iter 240: Train loss 2.214, Learning Rate 1.000e-05, It/sec 1.961, Tokens/sec 1115.525, Trained Tokens 161213, Peak mem 16.624 GB

Iter 250: Train loss 2.307, Learning Rate 1.000e-05, It/sec 1.773, Tokens/sec 1381.095, Trained Tokens 169002, Peak mem 16.624 GB

Iter 260: Train loss 2.339, Learning Rate 1.000e-05, It/sec 1.938, Tokens/sec 1065.749, Trained Tokens 174502, Peak mem 16.624 GB

Iter 270: Train loss 2.045, Learning Rate 1.000e-05, It/sec 1.878, Tokens/sec 1326.520, Trained Tokens 181564, Peak mem 16.624 GB

Iter 280: Train loss 2.215, Learning Rate 1.000e-05, It/sec 1.636, Tokens/sec 1169.647, Trained Tokens 188712, Peak mem 16.624 GB

Iter 290: Train loss 2.398, Learning Rate 1.000e-05, It/sec 2.435, Tokens/sec 1383.945, Trained Tokens 194395, Peak mem 16.624 GB

Iter 300: Train loss 2.276, Learning Rate 1.000e-05, It/sec 1.885, Tokens/sec 1316.467, Trained Tokens 201379, Peak mem 16.624 GB

Iter 300: Saved adapter weights to adapters/adapters.safetensors and adapters/0000300_adapters.safetensors.

Iter 310: Train loss 2.019, Learning Rate 1.000e-05, It/sec 3.024, Tokens/sec 1824.179, Trained Tokens 207411, Peak mem 16.624 GB

Iter 320: Train loss 2.214, Learning Rate 1.000e-05, It/sec 1.911, Tokens/sec 1354.781, Trained Tokens 214500, Peak mem 16.624 GB

Iter 330: Train loss 2.395, Learning Rate 1.000e-05, It/sec 1.583, Tokens/sec 1207.635, Trained Tokens 222129, Peak mem 16.624 GB

Iter 340: Train loss 2.249, Learning Rate 1.000e-05, It/sec 2.129, Tokens/sec 1240.204, Trained Tokens 227955, Peak mem 16.624 GB

Iter 350: Train loss 2.323, Learning Rate 1.000e-05, It/sec 1.749, Tokens/sec 1143.538, Trained Tokens 234493, Peak mem 16.624 GB

Iter 360: Train loss 2.174, Learning Rate 1.000e-05, It/sec 2.161, Tokens/sec 1342.160, Trained Tokens 240704, Peak mem 16.624 GB

Iter 370: Train loss 2.272, Learning Rate 1.000e-05, It/sec 4.033, Tokens/sec 2151.017, Trained Tokens 246037, Peak mem 16.624 GB

Iter 380: Train loss 1.896, Learning Rate 1.000e-05, It/sec 2.796, Tokens/sec 1475.982, Trained Tokens 251315, Peak mem 16.624 GB

Iter 390: Train loss 1.863, Learning Rate 1.000e-05, It/sec 4.384, Tokens/sec 2079.200, Trained Tokens 256058, Peak mem 16.624 GB

Iter 400: Val loss 2.404, Val took 13.340s

Iter 400: Train loss 2.445, Learning Rate 1.000e-05, It/sec 25.542, Tokens/sec 16413.409, Trained Tokens 262484, Peak mem 16.624 GB

Iter 400: Saved adapter weights to adapters/adapters.safetensors and adapters/0000400_adapters.safetensors.

Iter 410: Train loss 2.349, Learning Rate 1.000e-05, It/sec 0.941, Tokens/sec 550.681, Trained Tokens 268333, Peak mem 16.624 GB

Iter 420: Train loss 2.353, Learning Rate 1.000e-05, It/sec 1.366, Tokens/sec 944.161, Trained Tokens 275247, Peak mem 16.624 GB

Iter 430: Train loss 2.170, Learning Rate 1.000e-05, It/sec 2.231, Tokens/sec 1387.083, Trained Tokens 281463, Peak mem 16.624 GB

Iter 440: Train loss 2.229, Learning Rate 1.000e-05, It/sec 2.997, Tokens/sec 1876.160, Trained Tokens 287723, Peak mem 16.624 GB

Iter 450: Train loss 2.059, Learning Rate 1.000e-05, It/sec 3.882, Tokens/sec 2243.147, Trained Tokens 293501, Peak mem 16.624 GB

Iter 460: Train loss 2.169, Learning Rate 1.000e-05, It/sec 1.767, Tokens/sec 1258.703, Trained Tokens 300623, Peak mem 16.624 GB

Iter 470: Train loss 2.348, Learning Rate 1.000e-05, It/sec 1.943, Tokens/sec 1388.030, Trained Tokens 307765, Peak mem 16.624 GB

Iter 480: Train loss 2.477, Learning Rate 1.000e-05, It/sec 3.042, Tokens/sec 1862.633, Trained Tokens 313889, Peak mem 16.624 GB

Iter 490: Train loss 2.497, Learning Rate 1.000e-05, It/sec 1.716, Tokens/sec 1194.373, Trained Tokens 320851, Peak mem 16.624 GB

Iter 500: Train loss 2.312, Learning Rate 1.000e-05, It/sec 1.454, Tokens/sec 1364.874, Trained Tokens 330241, Peak mem 16.624 GB

Iter 500: Saved adapter weights to adapters/adapters.safetensors and adapters/0000500_adapters.safetensors.

Iter 510: Train loss 2.280, Learning Rate 1.000e-05, It/sec 1.876, Tokens/sec 1143.900, Trained Tokens 336339, Peak mem 16.624 GB

Iter 520: Train loss 2.126, Learning Rate 1.000e-05, It/sec 2.082, Tokens/sec 1229.904, Trained Tokens 342245, Peak mem 16.624 GB

Iter 530: Train loss 2.334, Learning Rate 1.000e-05, It/sec 1.517, Tokens/sec 1176.856, Trained Tokens 350005, Peak mem 16.624 GB

Iter 540: Train loss 2.199, Learning Rate 1.000e-05, It/sec 2.656, Tokens/sec 1637.412, Trained Tokens 356171, Peak mem 16.624 GB

Iter 550: Train loss 2.097, Learning Rate 1.000e-05, It/sec 1.843, Tokens/sec 1246.708, Trained Tokens 362934, Peak mem 16.624 GB

Iter 560: Train loss 2.120, Learning Rate 1.000e-05, It/sec 2.059, Tokens/sec 1224.648, Trained Tokens 368882, Peak mem 16.624 GB

Iter 570: Train loss 2.273, Learning Rate 1.000e-05, It/sec 2.000, Tokens/sec 1453.026, Trained Tokens 376147, Peak mem 16.624 GB

Iter 580: Train loss 2.178, Learning Rate 1.000e-05, It/sec 2.220, Tokens/sec 1353.278, Trained Tokens 382244, Peak mem 16.624 GB

Iter 590: Train loss 2.080, Learning Rate 1.000e-05, It/sec 2.168, Tokens/sec 1071.320, Trained Tokens 387186, Peak mem 16.624 GB

Iter 600: Val loss 2.169, Val took 12.341s

Iter 600: Train loss 2.038, Learning Rate 1.000e-05, It/sec 55.931, Tokens/sec 23804.291, Trained Tokens 391442, Peak mem 16.624 GB

Iter 600: Saved adapter weights to adapters/adapters.safetensors and adapters/0000600_adapters.safetensors.

Iter 610: Train loss 2.248, Learning Rate 1.000e-05, It/sec 0.997, Tokens/sec 646.073, Trained Tokens 397920, Peak mem 16.624 GB

Iter 620: Train loss 2.260, Learning Rate 1.000e-05, It/sec 2.245, Tokens/sec 1227.927, Trained Tokens 403389, Peak mem 16.624 GB

Iter 630: Train loss 2.370, Learning Rate 1.000e-05, It/sec 1.593, Tokens/sec 1148.811, Trained Tokens 410602, Peak mem 16.624 GB

Iter 640: Train loss 2.010, Learning Rate 1.000e-05, It/sec 2.057, Tokens/sec 1101.370, Trained Tokens 415957, Peak mem 16.624 GB

Iter 650: Train loss 2.521, Learning Rate 1.000e-05, It/sec 1.219, Tokens/sec 1230.246, Trained Tokens 426046, Peak mem 19.563 GB

Iter 660: Train loss 2.359, Learning Rate 1.000e-05, It/sec 1.655, Tokens/sec 1072.865, Trained Tokens 432528, Peak mem 19.563 GB

Iter 670: Train loss 2.143, Learning Rate 1.000e-05, It/sec 1.775, Tokens/sec 1272.313, Trained Tokens 439696, Peak mem 19.563 GB

Iter 680: Train loss 2.340, Learning Rate 1.000e-05, It/sec 1.690, Tokens/sec 1167.810, Trained Tokens 446608, Peak mem 19.563 GB

Iter 690: Train loss 2.159, Learning Rate 1.000e-05, It/sec 1.605, Tokens/sec 989.254, Trained Tokens 452771, Peak mem 19.563 GB

Iter 700: Train loss 2.440, Learning Rate 1.000e-05, It/sec 1.332, Tokens/sec 1160.377, Trained Tokens 461483, Peak mem 19.563 GB

Iter 700: Saved adapter weights to adapters/adapters.safetensors and adapters/0000700_adapters.safetensors.

Iter 710: Train loss 2.047, Learning Rate 1.000e-05, It/sec 1.688, Tokens/sec 1053.941, Trained Tokens 467727, Peak mem 19.563 GB

Iter 720: Train loss 2.213, Learning Rate 1.000e-05, It/sec 1.501, Tokens/sec 1157.829, Trained Tokens 475443, Peak mem 19.563 GB

Iter 730: Train loss 2.108, Learning Rate 1.000e-05, It/sec 2.109, Tokens/sec 1315.582, Trained Tokens 481681, Peak mem 19.563 GB

Iter 740: Train loss 2.194, Learning Rate 1.000e-05, It/sec 1.637, Tokens/sec 1121.043, Trained Tokens 488530, Peak mem 19.563 GB

Iter 750: Train loss 2.525, Learning Rate 1.000e-05, It/sec 1.458, Tokens/sec 1129.076, Trained Tokens 496276, Peak mem 19.563 GB

Iter 760: Train loss 2.113, Learning Rate 1.000e-05, It/sec 1.997, Tokens/sec 1184.060, Trained Tokens 502204, Peak mem 19.563 GB

Iter 770: Train loss 2.036, Learning Rate 1.000e-05, It/sec 2.314, Tokens/sec 1330.956, Trained Tokens 507956, Peak mem 19.563 GB

Iter 780: Train loss 2.383, Learning Rate 1.000e-05, It/sec 1.669, Tokens/sec 1303.686, Trained Tokens 515768, Peak mem 19.563 GB

Iter 790: Train loss 2.396, Learning Rate 1.000e-05, It/sec 1.898, Tokens/sec 1310.890, Trained Tokens 522673, Peak mem 19.563 GB

Iter 800: Val loss 2.242, Val took 12.262s

Iter 800: Train loss 2.152, Learning Rate 1.000e-05, It/sec 19.213, Tokens/sec 11858.130, Trained Tokens 528845, Peak mem 19.563 GB

Iter 800: Saved adapter weights to adapters/adapters.safetensors and adapters/0000800_adapters.safetensors.

Iter 810: Train loss 2.500, Learning Rate 1.000e-05, It/sec 1.061, Tokens/sec 786.734, Trained Tokens 536258, Peak mem 19.563 GB

Iter 820: Train loss 2.197, Learning Rate 1.000e-05, It/sec 2.225, Tokens/sec 1393.770, Trained Tokens 542522, Peak mem 19.563 GB

Iter 830: Train loss 2.255, Learning Rate 1.000e-05, It/sec 1.425, Tokens/sec 1132.581, Trained Tokens 550469, Peak mem 19.563 GB

Iter 840: Train loss 2.241, Learning Rate 1.000e-05, It/sec 1.335, Tokens/sec 940.447, Trained Tokens 557515, Peak mem 19.563 GB

Iter 850: Train loss 2.000, Learning Rate 1.000e-05, It/sec 2.260, Tokens/sec 1384.089, Trained Tokens 563640, Peak mem 19.563 GB

Iter 860: Train loss 2.270, Learning Rate 1.000e-05, It/sec 2.234, Tokens/sec 1403.861, Trained Tokens 569924, Peak mem 19.563 GB

Iter 870: Train loss 2.250, Learning Rate 1.000e-05, It/sec 1.649, Tokens/sec 1255.475, Trained Tokens 577538, Peak mem 19.563 GB

Iter 880: Train loss 2.472, Learning Rate 1.000e-05, It/sec 1.348, Tokens/sec 1234.302, Trained Tokens 586695, Peak mem 19.563 GB

Iter 890: Train loss 2.070, Learning Rate 1.000e-05, It/sec 2.166, Tokens/sec 1251.259, Trained Tokens 592472, Peak mem 19.563 GB

Iter 900: Train loss 2.316, Learning Rate 1.000e-05, It/sec 2.552, Tokens/sec 1488.881, Trained Tokens 598306, Peak mem 19.563 GB

Iter 900: Saved adapter weights to adapters/adapters.safetensors and adapters/0000900_adapters.safetensors.

Iter 910: Train loss 2.160, Learning Rate 1.000e-05, It/sec 2.234, Tokens/sec 1344.204, Trained Tokens 604324, Peak mem 19.563 GB

Iter 920: Train loss 2.591, Learning Rate 1.000e-05, It/sec 2.190, Tokens/sec 1554.924, Trained Tokens 611424, Peak mem 19.563 GB

Iter 930: Train loss 2.097, Learning Rate 1.000e-05, It/sec 2.112, Tokens/sec 1345.441, Trained Tokens 617793, Peak mem 19.563 GB

Iter 940: Train loss 2.032, Learning Rate 1.000e-05, It/sec 2.066, Tokens/sec 1289.223, Trained Tokens 624033, Peak mem 19.563 GB

Iter 950: Train loss 2.179, Learning Rate 1.000e-05, It/sec 1.766, Tokens/sec 1172.334, Trained Tokens 630673, Peak mem 19.563 GB

Iter 960: Train loss 2.460, Learning Rate 1.000e-05, It/sec 2.124, Tokens/sec 1466.610, Trained Tokens 637577, Peak mem 19.563 GB

Iter 970: Train loss 2.454, Learning Rate 1.000e-05, It/sec 1.264, Tokens/sec 1176.553, Trained Tokens 646887, Peak mem 19.563 GB

Iter 980: Train loss 2.473, Learning Rate 1.000e-05, It/sec 1.572, Tokens/sec 912.267, Trained Tokens 652692, Peak mem 19.563 GB

Iter 990: Train loss 2.228, Learning Rate 1.000e-05, It/sec 1.679, Tokens/sec 1181.333, Trained Tokens 659726, Peak mem 19.563 GB

Iter 1000: Val loss 2.371, Val took 13.392s

Iter 1000: Train loss 1.908, Learning Rate 1.000e-05, It/sec 67.483, Tokens/sec 36589.059, Trained Tokens 665148, Peak mem 19.563 GB

Iter 1000: Saved adapter weights to adapters/adapters.safetensors and adapters/0001000_adapters.safetensors.

Saved final adapter weights to adapters/adapters.safetensors.
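A log this long is easier to judge once the validation losses are pulled out. The sketch below is a hypothetical helper that parses lines in the format shown above (`Iter N: Val loss X, ...`); it assumes the console output was saved to a text file, for example with `tee`.

```python
import re

# Matches lines such as "Iter 200: Val loss 2.352, Val took 17.364s".
VAL_RE = re.compile(r"Iter (\d+): Val loss ([\d.]+)")
# Matches lines such as "Iter 10: Train loss 3.094, Learning Rate ...".
TRAIN_RE = re.compile(r"Iter (\d+): Train loss ([\d.]+)")


def val_losses(log_text):
    """Return [(iteration, val_loss), ...] in the order they appear."""
    return [(int(it), float(loss)) for it, loss in VAL_RE.findall(log_text)]


def train_losses(log_text):
    """Return [(iteration, train_loss), ...] in the order they appear."""
    return [(int(it), float(loss)) for it, loss in TRAIN_RE.findall(log_text)]


def best_checkpoint(log_text):
    """(iteration, val_loss) with the lowest validation loss."""
    return min(val_losses(log_text), key=lambda p: p[1])
```

Applied to this run, the validation loss is lowest at iteration 600 (2.169) and ends at 2.371 at iteration 1000; since `adapters/0000600_adapters.safetensors` was saved at that step, it may be worth comparing against the final adapter before fusing.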

(mlx) $ mlx_lm.fuse --model /Users/lihui/Work/models/huggingface/llm/Qwen/Qwen2-0.5B-Instruct --adapter-path adapters --save-path qwen2-0.5b-train

Loading pretrained model

Special tokens have been added in the vocabulary, make sure the associated word embeddings are fine-tuned or trained.

3. Q&A

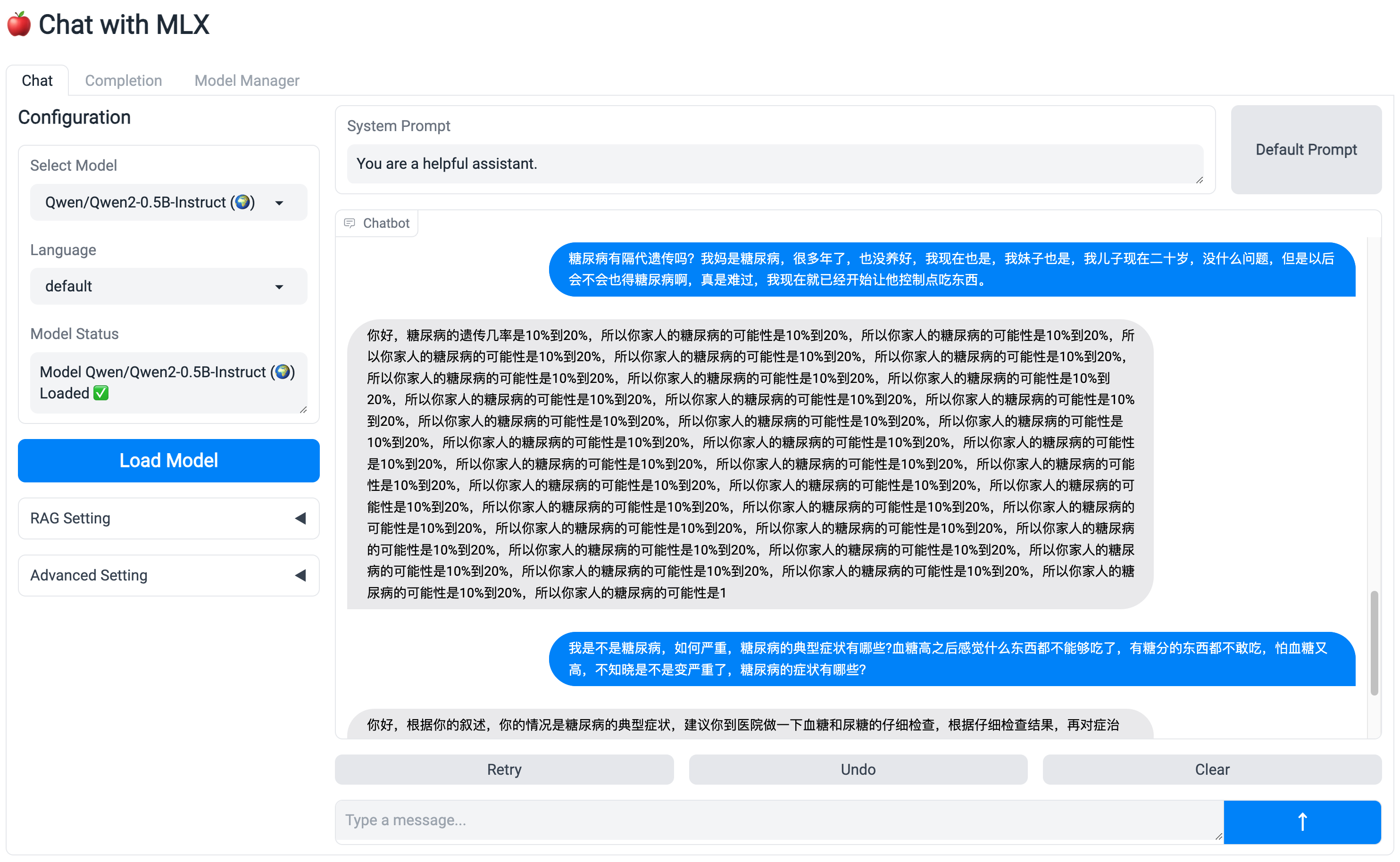

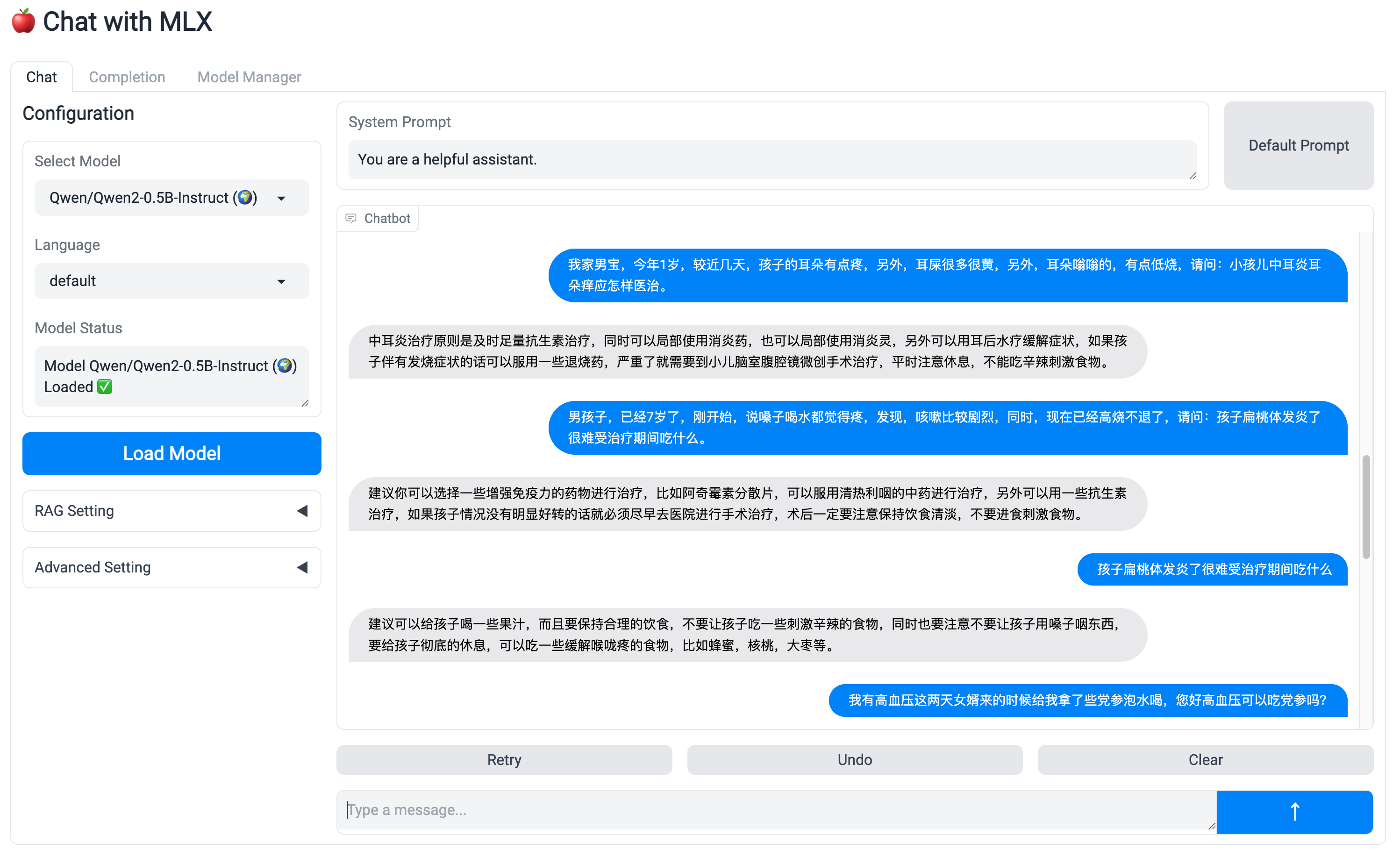

For the Q&A I used chat-with-mlx directly. Its YAML configuration is a bit odd, but at least it gives you a web UI.

First, I asked about a few random symptoms.

Then I tried a few questions taken from the dataset.

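During testing, the model sometimes fell into an endless loop, repeating the same answer over and over. Small models with few parameters seem to run into this problem fairly often.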
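When collecting test answers, a simple check can at least flag and trim this kind of runaway output. The helpers below are hypothetical post-processing of my own: they look for a chunk repeated at least three times at the very end of the text and cut it back to one copy. This does nothing to stop the model from looping; it only makes the looped answers easier to spot and read.

```python
def trailing_repeat(text, min_unit=2, min_repeats=3):
    """Return the shortest chunk (>= min_unit chars) that repeats at least
    min_repeats times at the very end of text, or None if there is none."""
    n = len(text)
    for size in range(min_unit, n // min_repeats + 1):
        unit = text[n - size:]
        if text.endswith(unit * min_repeats):
            return unit
    return None


def truncate_loop(text, min_unit=2, min_repeats=3):
    """Cut a trailing loop down to a single copy of the repeated chunk."""
    unit = trailing_repeat(text, min_unit, min_repeats)
    if unit is None:
        return text
    while text.endswith(unit * 2):
        text = text[: -len(unit)]
    return text
```

The scan is quadratic in the output length, which is fine for single answers capped at a few hundred tokens; `min_unit=2` deliberately ignores single repeated characters such as a run of punctuation.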